Hiring in the Age of Deep Faked Skills

How do you test for technical skills? How do you test for AI savvy? How do you avoid fake candidates? How do you filter out bot submissions?

What Austin CTOs Are Doing Now

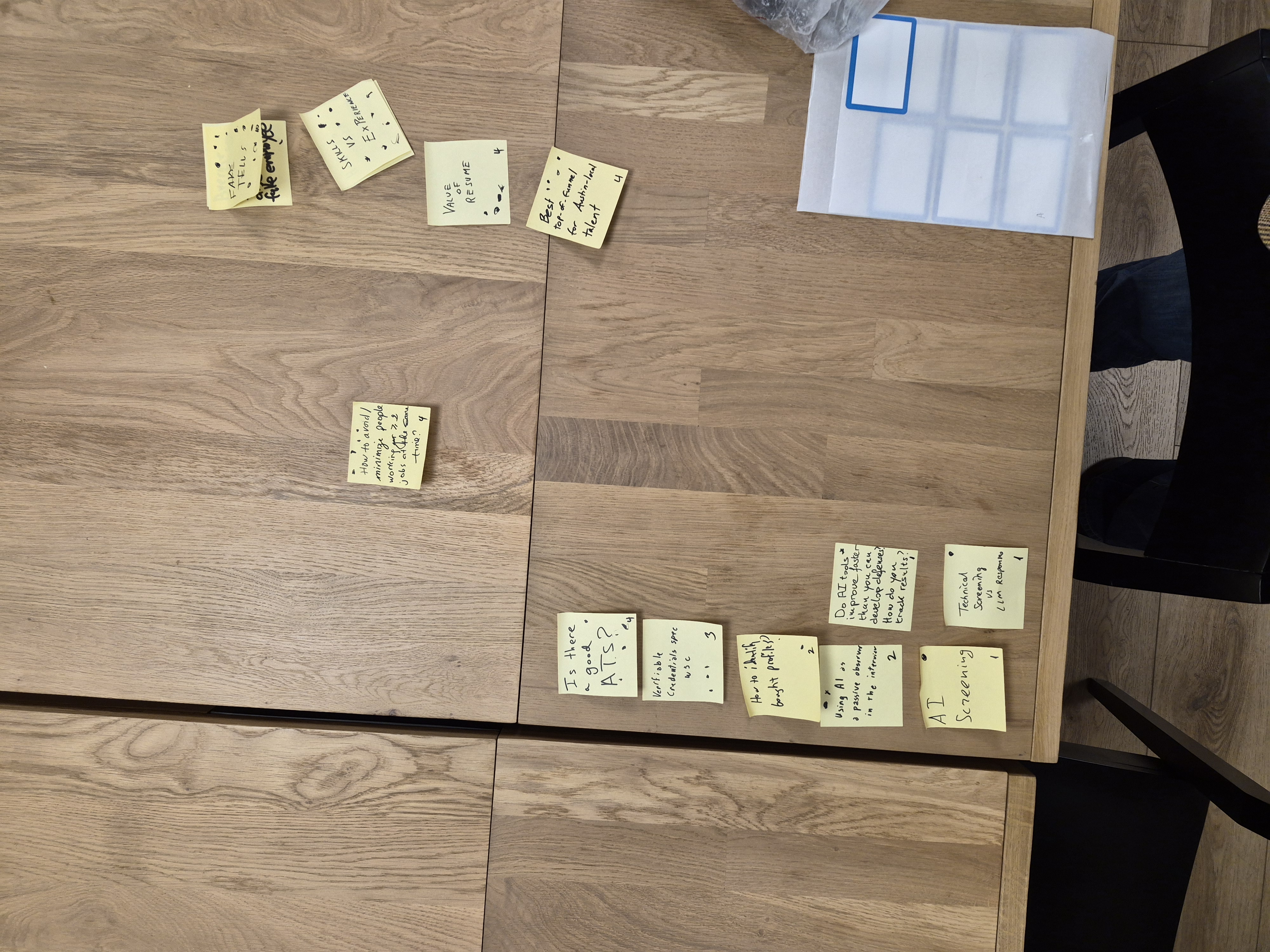

December’s Austin CTO Lean Coffee had a clear theme: “Hiring in the Age of Deep Faked Skills.”

The question on the table was not “how do we hire faster.” It was “how do we hire safely” when resumes can be generated, portfolios can be purchased, interviews can be proxied, and day-one employees might not be who they claim to be.

What came out of the room was not panic, and it was not a call to ban AI. It was something more useful: a shared recognition that hiring has become an adversarial system. This is no longer just an HR problem. It is sliding into security, operations, and systems design.

The biggest takeaway was not a single trick. It was a mindset shift:

Trust needs to move left, and verification cannot stop at the offer.

Hiring is now a trust pipeline

A few years ago, most hiring funnels assumed good faith. You validated skills, checked references, and made a call. Today, a harsher truth keeps showing up: sometimes you are not interviewing a candidate. You are interacting with an attack surface.

AI accelerates that reality in two directions at once. It enables legitimate candidates to show up sharper, with better writing, better prep, and faster iteration. But it also enables bad actors to manufacture credibility through fake histories, fake submissions, fake presence, and proxy interviewing.

So the discussion quickly moved from “How do we grade someone?” to “How do we establish identity and continuity without becoming paranoid or unfair?”

That tension between tightening defenses and not punishing real candidates was the heartbeat of the night.

Practical defenses that still work under pressure

The first set of ideas was practical and immediately deployable, especially for teams drowning in inbound volume.

One crowd favorite was the instruction-following canary. Cover letters used to be treated as signals of intent. Now they are often AI-assisted rewrites of the job post. Instead of asking “why us,” some teams embed a small instruction in the application flow, usually in a cover letter prompt or form field. It is not about proving brilliance. It is about filtering out low-effort automation and bulk submissions.
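As a minimal sketch of how such a canary check might be automated, assume the application form asks candidates to include a specific phrase in their cover letter. The phrase `blue heron`, the function names, and the two queue names are all hypothetical, chosen only for illustration. Routing misses to a low-priority queue rather than rejecting them outright is also an assumption, made so that a real candidate who skimmed the prompt is not silently dropped.

```python
import re

# Hypothetical canary phrase the application form asks candidates to include.
CANARY_PHRASE = "blue heron"


def normalize(text: str) -> str:
    """Lowercase and collapse punctuation/whitespace into single spaces."""
    return re.sub(r"[^a-z0-9]+", " ", text.lower()).strip()


def follows_canary(cover_letter: str, phrase: str = CANARY_PHRASE) -> bool:
    """Return True if the phrase appears as whole words in the letter."""
    needle = normalize(phrase)
    haystack = normalize(cover_letter)
    # Pad with spaces so "blueherons" does not count as a match.
    return f" {needle} " in f" {haystack} "


def triage(applications: dict[str, str],
           phrase: str = CANARY_PHRASE) -> dict[str, list[str]]:
    """Split applicant IDs into a review queue and a low-priority queue."""
    queues: dict[str, list[str]] = {"review": [], "low_priority": []}
    for applicant_id, letter in applications.items():
        queue = "review" if follows_canary(letter, phrase) else "low_priority"
        queues[queue].append(applicant_id)
    return queues


if __name__ == "__main__":
    sample = {
        "a": "P.S. Blue-Heron, as requested in the posting.",
        "b": "I am excited to apply because of your culture.",
    }
    print(triage(sample))
```

The normalization step is deliberately forgiving about case and punctuation, since the goal is to catch submissions that ignored the instruction entirely, not to penalize formatting.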

A phrase that stuck was “evergreen defenses.” When volume is high, you need filters that stay useful even as tooling changes.

From there the conversation broadened into “verifiable profiles.” Not in a cryptographic sense yet, but in a pattern sense. Does this person have a credible progression and a real presence? Or is the profile brand new, generic, incomplete, and conveniently polished?

Then came a more sensitive topic: validating the candidate’s environment early. Some teams check IP signals for VPN or location mismatches. The room was careful here. Signals can be noisy and ethically tricky. People travel. People use privacy tools. Remote work is messy. The point was not “geo equals truth.” The point was that when something already feels off, additional signals can help you decide where to probe.

One of the most human probes was not technical at all: local knowledge. If a candidate claims to live in Austin and the interviewer’s gut says something is weird, they ask mundane location questions. What part of town are you in? What is nearby? What is the commute like? A consistent anecdote was that offshore or proxy candidates freeze when asked for concrete local detail.

That sequence of canary, profile credibility, early environment checks, and human sanity checks felt like an emerging baseline for keeping the top of funnel usable.
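One way to picture that baseline is as a small triage rule in which no single signal decides anything and each raised signal only suggests where to probe next. The sketch below is an illustration under stated assumptions: the signal names, the follow-up wording, and the "two or more signals means manual review" rule are hypothetical and were not prescribed by anyone in the room.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Signals:
    """Hypothetical top-of-funnel signals; each one is noisy on its own."""
    failed_canary: bool = False
    thin_profile: bool = False
    location_mismatch: bool = False
    vague_local_detail: bool = False


# Hypothetical follow-up probes, one per signal.
FOLLOW_UPS = {
    "failed_canary": "Ask for a short written answer to a role-specific question.",
    "thin_profile": "Ask for references or work history that can be checked.",
    "location_mismatch": "Ask about current location and travel plans.",
    "vague_local_detail": "Ask mundane questions about the claimed area.",
}


def next_step(signals: Signals) -> tuple[str, list[str]]:
    """Return a suggested action and the probes worth running.

    A single signal never escalates by itself. It only adds a probe.
    Two or more signals route the candidate to a human reviewer.
    """
    raised = [name for name in FOLLOW_UPS if getattr(signals, name)]
    probes = [FOLLOW_UPS[name] for name in raised]
    if not raised:
        return ("proceed", [])
    if len(raised) == 1:
        return ("proceed_with_probe", probes)
    return ("manual_review", probes)


if __name__ == "__main__":
    print(next_step(Signals(location_mismatch=True)))
    print(next_step(Signals(thin_profile=True, vague_local_detail=True)))
```

Keeping the output as "what to ask next" rather than a score reflects the caution voiced above: these signals help decide where to probe, not whom to reject.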

You do not always catch it in the interview

The tone in the room changed when people started swapping stories about fraud that made it through.

The sobering thread was this: sometimes the first real proof arrives after the employee has access. At that point you are no longer in a hiring conversation. You are in incident response.

Security-minded orgs described leaning on MDM, managed devices, tighter polling, and anomaly detection. One story involved a suspicious KVM setup early in employment, suggesting remote control that did not match the identity presented during hiring. Another pattern was unusually high download behavior compared to peers, which triggered alarms. Another was a “never on camera” pattern. By itself it is not evidence, but when combined with other inconsistencies it becomes meaningful.
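The "unusually high download behavior compared to peers" pattern can be sketched as a simple outlier check. The example below assumes you already have per-user daily download volumes from device or network telemetry. The function name, the input shape, and the 3.5 threshold are assumptions for illustration. It uses a median-based (MAD) score rather than a mean-based one so that a single heavy downloader does not distort the baseline it is compared against.

```python
from statistics import median


def flag_heavy_downloaders(daily_mb: dict[str, float],
                           threshold: float = 3.5) -> list[str]:
    """Return users whose download volume is far above the peer median.

    Uses a robust z-score: 0.6745 * (value - median) / MAD, where MAD is
    the median absolute deviation. Only unusually HIGH values are flagged.
    """
    values = list(daily_mb.values())
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        # More than half the peers have identical volumes, so the robust
        # score is undefined. Return nothing rather than guess.
        return []
    flagged = []
    for user, volume in daily_mb.items():
        score = 0.6745 * (volume - med) / mad
        if score > threshold:
            flagged.append(user)
    return flagged


if __name__ == "__main__":
    # Made-up volumes in megabytes, for illustration only.
    peers = {"a": 100.0, "b": 120.0, "c": 90.0, "d": 110.0, "e": 5000.0}
    print(flag_heavy_downloaders(peers))
```

A flag from a check like this is a prompt to look closer, not a verdict. Legitimate work such as onboarding or a large migration can produce the same spike.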

The group was realistic about tradeoffs. Many companies do not have bandwidth for continuous biometric verification. And assuming “MDM will stop state actors” is wishful thinking. Still, MDM and device telemetry can help with a narrower problem: detecting behavior that does not match what you expect from a legitimate employee.

That naturally led into stronger identity primitives. YubiKeys came up as a practical proof mechanism. Verifiable credentials also came up, specifically W3C-style concepts, as a longer-term direction. Not as “we all do this tomorrow,” but as a signal that hiring may borrow the same evolution security did: moving from soft trust toward cryptographic proof.
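To make "cryptographic proof" concrete, here is a minimal challenge-response sketch. It assumes a secret was enrolled with the person verified during hiring, and it uses a shared secret with HMAC from the Python standard library purely for simplicity. Hardware keys used for FIDO2/WebAuthn rely on public-key signatures instead, so treat this as an illustration of the idea, not of how any particular device works. All function names are hypothetical.

```python
import hashlib
import hmac
import secrets


def new_challenge() -> bytes:
    """Generate a fresh random challenge so old responses cannot be replayed."""
    return secrets.token_bytes(32)


def respond(secret: bytes, challenge: bytes) -> bytes:
    """Compute the response only the holder of the enrolled secret can produce."""
    return hmac.new(secret, challenge, hashlib.sha256).digest()


def verify(secret: bytes, challenge: bytes, response: bytes) -> bool:
    """Check a response using a constant-time comparison."""
    expected = respond(secret, challenge)
    return hmac.compare_digest(expected, response)


if __name__ == "__main__":
    enrolled = secrets.token_bytes(32)   # issued at verified onboarding
    challenge = new_challenge()
    print(verify(enrolled, challenge, respond(enrolled, challenge)))
    impostor = secrets.token_bytes(32)   # someone without the enrolled secret
    print(verify(enrolled, challenge, respond(impostor, challenge)))
```

The point of the sketch is continuity: whoever answers the challenge on day thirty must hold what was enrolled on day one, which is a stronger claim than "the voice on the call sounds the same."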

Why old signals are collapsing

Once LLMs entered the conversation, the room kept describing the same failure mode in different ways.

People can look experienced. People can sound experienced. People can deliver decent outputs. But some cannot explain tradeoffs, failure modes, or what they would do when reality gets messy.

AI has widened the gap between answers and judgment. Answers are cheap. Judgment is not.

That reframed the “skills vs experience” question. Experience artifacts like resume bullets, polished narratives, and take-home submissions are easier to manufacture now. So the group’s preferred shift was toward evaluating reasoning continuity. Can you defend your approach under constraints? Can you explain alternatives and why you did not choose them? Can you debug and narrate your thinking without collapsing? Do you notice the production gotchas?

Take-homes were the poster child here. Not because they are useless, but because they no longer prove what they used to. Candidates can produce a clean solution with AI assistance and still struggle when asked to walk through tradeoffs. Several people noted the mismatch: strong take-home performance paired with weak conceptual reasoning when put on the spot.

The meetup also acknowledged a darker angle: interviews themselves can be attacked. Proxy interviewing, hired expert stand-ins, and bait-and-switch arrangements were all discussed. In that world, what matters is not only your questions. It is whether your process establishes continuity between the person who interviewed and the person who ships.

That is why some teams are debating more controlled settings, including in-person interviews for certain roles. Not as nostalgia, but as a defense against identity substitution.

Do not ban AI, hire people who can use it

Nobody advocated for “no AI.” If anything, the direction felt almost opposite.

We want candidates who can use AI to build. We just do not want people who collapse when the scaffolding disappears.

That distinction kept coming up: AI-assisted builder versus AI-dependent performer.

Some teams are experimenting with using AI during interviews as a passive observer that summarizes, captures inconsistencies, and suggests follow-ups. Not “AI decides hire or no-hire,” but “AI helps the interviewer stay consistent and curious.” The nuance here was important: AI can reliably improve the interviewer’s workflow even if it cannot reliably detect truth in a candidate.

When someone asked the obvious question, “Do AI tools improve faster than defenses?” the room’s answer was essentially this: stop chasing tools and build around invariants. Continuity. Tradeoffs. Debugging. Production thinking. Identity.

The resume is collapsing, and meetups are back in fashion

The resume took the most heat. Several people argued that resumes have become less a credential and more a marketing artifact. Too easy to tailor, too easy to generate, too easy to fake. In that framing, resumes are useful as conversation prompts, not as truth.

The more interesting shift was what replaced them: human networks.

In Austin, at least one company claimed that a large share of hires came through meetup relationships. It is not perfect. Referrals can be gamed too, especially when incentives create spammy behavior. But community presence restores something that transactional job pipelines often lose: context.

When everything can be fabricated, showing up consistently becomes a kind of liveness signal.

The adjacent thread: multiple jobs and tolerance

One Lean Coffee thread that fit the theme more than expected was “people working two jobs.” Not always malicious, but still a trust and risk problem.

The room did not land on heavy surveillance as the answer. Monitoring can backfire, invade privacy, and erode trust. Instead, people focused on defining what actually matters: conflicts of interest as the hard line, IP and data security as non-negotiable, and results and availability as the operational core.

On small teams, tolerance is lower because one disengaged person can collapse velocity and morale. A practical lever came up more than once: skin in the game. Equity, ownership, and real responsibility can reduce the incentive to split attention.

What the room actually agreed on

If you compress the whole night into one arc, it is this:

Hiring has become adversarial, so the funnel has to become intentional.

A modern approach looks like layered defense:

- Cheap top-of-funnel filters to stop automation and obvious fraud.

- Interview loops that test reasoning and judgment, not just outputs.

- Continuity checks that reduce proxy and bait-and-switch attacks.

- Post-hire monitoring that is proportional and privacy-aware, because some threats only show up after access.

- Community and intel sharing, because no single company sees the whole pattern.

The point is not paranoia. The point is robustness.

In the age of deep-faked skills, the real question is no longer “can they produce code?”

It is: are they real, are they consistent, and can they reason when it counts?